EPIPHANIES FOR EVERYBODY

The Impact Of Setting Targets

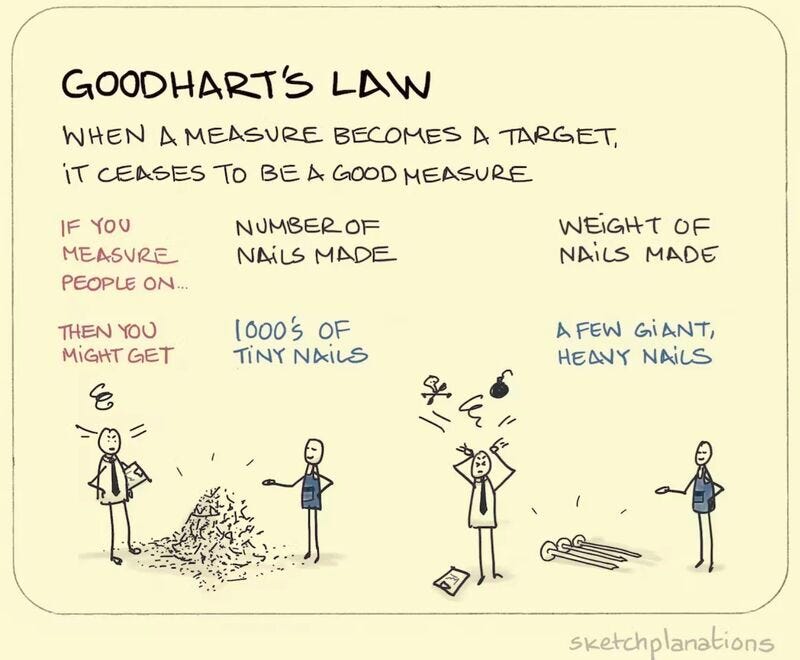

There’s a graphic making its way around LinkedIn. Perhaps you’ve seen it?

It correctly states Goodhart’s Law, and then uses incorrect illustrations to elaborate. I’ve seen it enough times that I think it’s worth pointing out where it goes wrong.

Goodhart’s Law doesn’t say, “Don’t measure things.” It says, “Don’t turn your measurements into targets.”

In the example graphic, maybe the company sells 3000 nails per day. Maybe they aren’t making enough. So management creates a target of 3000 nails. Now people know what to do, right? Wrong. If the system they’re in doesn’t enable the creation of 3000 nails/day, people will cut corners to meet the number — quality, for example, will degrade; nails will be shipped that would previously have been reworked due to QC problems. If the machine makes smaller nails faster, nails will probably get smaller, even if that’s not what customers need. The system needs to change, but the burden has been placed on the people.

Let me illustrate with a real example from a company I worked with (I was working in a different area, so I was an observer of what was going on, not a participant in rectifying it). We’ll call it Innosure (I hope there’s no real Innosure out there). For years, Innosure had been struggling with major outages, downtime, data corruption, and other major issues. So far, they had managed to keep it out of the public eye, but the board thought it was only a matter of time until something happened that was so big it couldn’t be kept out of the papers.

Innosure’s board decided that something had to be done. After much deliberation, they settled on a plan: set a limit on the number of P1 and P2* outages allowed each year; gradually reduce that number every year until the company was down to zero; introduce consequences for failing to keep outages below the target; and require reporting on every P1 or P2 outage, so the board was in the loop about what was going on. Their thinking was that a target of this nature would encourage people to focus on the things that tended to fail, and improve them so that they didn’t fail. Requiring a report on each outage would ensure that outages weren’t simply hidden from the board’s sight. All very reasonable.

Unfortunately, things didn’t work out the way the board thought they would. In fact, things got worse. But why? In order to understand why their mandate failed, it’s important to understand a little of how Innosure worked.

The way Innosure’s systems worked (development, product, support, infrastructure, and all the interconnected people) was that every year, leadership would set a budget for what they expected to spend over the course of the year. They would also come up with changes and new features they wanted added to the software. These additions would be prioritised and incentivised. And each team, or each area, would be responsible for their delivery. These items always superseded attempts to create more stability, deal with old debt, or otherwise improve existing systems (there’s a lot to unpack to understand why this happened, so for now we’re just going to say that it happened).

Innosure’s board and executive also weren’t aware of the level of debt and fragility in the system, so when it came time to budget, they were informed mostly by previous budgets, and not by the significant investments required to stabilise or improve their existing technical systems.

When the mandate was handed down to the people responsible for doing the work, they knew it was impossible to achieve the target by doing things the right way. The rest of the organisation wasn’t aligned behind the changes that would have to happen to reduce outages, so none of their normal workload would be reduced to make room for that work. Did they let that stop them? Of course not. They had a target, and bonuses riding on achieving it.

That year, they hit their target. But knowing what we know about the organisation, we can be sure they didn’t do it by doing what the board wanted them to do. So, how did they achieve it? In the end, it was rather simple. As the year went on, and the count of outages got closer and closer to the limit, they would start recategorising outages from P1 or P2 down to P3 or P4. That way the board didn’t have to be told about them, and the outages wouldn’t affect their bonuses or push them over the target. It was genius… and terrible for the organisation.

During a P1 or P2 outage, the entire organisation is mobilised to reduce the impact, recover quickly, and ensure no customers are impacted more than is absolutely necessary. Time and money were spent on rectifying any problems that occurred during a P1 or P2, in order to keep customers from getting upset. By incorrectly categorising P1 and P2 outages as P3 or P4 outages, the following things happened (or didn’t):

- Non-technical teams were not mobilised to ensure customers remained satisfied
- The board was not notified of major outages
- Teams outside those that were immediately affected weren’t available to help resolve problems

Things got worse. At the end of year 1, the board saw that their plan was working, so they continued with it. As far as they knew, their target was effective. Numbers don’t lie.

During year 2, things got more fragile. As the appetite for P1 and P2 outages continued to decrease, the number of real P1 and P2 outages increased. But because the reported numbers looked good, nobody asked why there were more outages, or what would have to happen to reduce them. On paper, outages were on their way down, after all.

There is a fix for this problem. The first step is understanding how bad the problem is. And that won’t happen while the target exists. Removing the target allows more honest reporting and measuring of what’s going on. That enables the executive and the board to understand the scale of the problem. And it enables them to try new things to reduce outages. By keeping P1 and P2 outages as a measure, instead of as a target, the variability in frequency can be identified, which then allows the organisation to start identifying patterns and causes (maybe outages go up after big releases, or when the main database server runs out of memory, or when a certain faulty router has a blip).
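One way to look at that variability is a process behaviour (XmR) chart, which separates routine month-to-month noise from points worth investigating. The sketch below is only an illustration: the monthly counts are invented, the function names are hypothetical, and the limits use the conventional XmR constant of 2.66 times the average moving range. It is not what Innosure did.

```python
from statistics import mean


def xmr_limits(counts):
    """Return (centre, lower, upper) natural process limits for an XmR chart."""
    if len(counts) < 2:
        raise ValueError("need at least two data points")
    # Moving range: absolute difference between consecutive observations.
    moving_ranges = [abs(b - a) for a, b in zip(counts, counts[1:])]
    centre = mean(counts)
    spread = 2.66 * mean(moving_ranges)  # conventional XmR scaling constant
    # Outage counts cannot be negative, so the lower limit is floored at zero.
    return centre, max(0.0, centre - spread), centre + spread


def find_signals(counts):
    """Return indices of points that fall outside the natural process limits."""
    _, lower, upper = xmr_limits(counts)
    return [i for i, c in enumerate(counts) if c < lower or c > upper]


# Hypothetical monthly P1 + P2 outage counts, invented purely for illustration.
monthly_outages = [4, 6, 5, 7, 5, 4, 6, 5, 14, 6, 5, 4]

print(find_signals(monthly_outages))  # → [8]
```

Here only the ninth month (index 8) falls outside the limits, so that is the month to ask questions about: was there a big release, a memory problem, a flaky router? The other months are routine variation, and reacting to each of them individually would be chasing noise.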

By keeping the data as raw as possible, it’s possible to see whether particular interventions are effective, or whether they make things worse. Each time we try something, we can see the impact. It might take time to see it, but we will be able to. As soon as we turn the measure into a target, we skew behaviour. And it’s incredibly difficult to know how we will end up shaping behaviour. Since there are numerous ways the new behaviour could be harmful, and only one or two ways it could be helpful, we will almost always end up with unintended, harmful behaviour.

Once it’s clear what’s happening to outages, the second step becomes possible — learn how the various incentives and priorities being set impact the organisation’s ability to stabilise and modernise the existing technical systems.

Step 3 is to then gather information on what investments would be necessary to start improving the outage data: how to fix or eliminate the patterns (smaller releases, fragmenting the single database, replacing the router, etc.), thereby reducing aggregate outages.

Step 4 is to implement a change, measure its impact, and decide whether it was an intervention worth keeping, or whether it needs to be removed.
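As a rough sketch of what "measure its impact" could look like, the code below compares outage counts after a change against the average from before it. It uses one common run rule (eight consecutive points on the same side of the old average) as the evidence threshold. The data, the function name, and the threshold are all assumptions made for illustration, and the measure is treated as "lower is better".

```python
from statistics import mean

RUN_LENGTH = 8  # assumed threshold: a commonly used run rule, adjust as needed


def assess_intervention(before, after, run_length=RUN_LENGTH):
    """Suggest what to do with a change, given counts before and after it."""
    baseline = mean(before)
    below = above = 0
    for value in after:
        # Track consecutive runs on each side of the pre-change average.
        below = below + 1 if value < baseline else 0
        above = above + 1 if value > baseline else 0
        if below >= run_length:
            return "keep"    # sustained improvement
        if above >= run_length:
            return "remove"  # sustained deterioration
    return "keep watching"   # not enough evidence either way yet


# Hypothetical weekly outage counts around a hypothetical change
# (for example, moving to smaller releases).
before = [5, 7, 6, 8, 6, 5, 7, 6]
after = [5, 4, 5, 3, 4, 5, 4, 3]

print(assess_intervention(before, after))  # → keep
```

The "keep watching" outcome matters as much as the other two: it reflects the point that an effect can take time to show up, and that a couple of good weeks are not proof. None of this works if the counts themselves are being recategorised to hit a target.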

Without Step 1, however, none of the other steps are ever seen or understood, because the scale of the problem can never be understood.

if management sets quantitative targets and makes people’s job depend on meeting them, “they will likely meet the targets — even if they have to destroy the enterprise to do it.” -Deming

Ultimately, the measure was never the problem. The measure is just data. It’s how the measure is used, and whether it’s made into a target, that determines whether there’s a problem.

* Lots of companies define their own outage scales. What’s important is that a P1 outage usually refers to something that takes the entire system down for all customers. A P2 outage is something that takes the entire system down for some customers, or takes part of the system down for all customers. P3 outages are those that take a part of the system down, but there’s a workaround. Severity is normally a 4- or 5-point scale.

Copyright © 2026 Noah Cantor Ltd. All Rights Reserved.